Blue/Green Migration¶

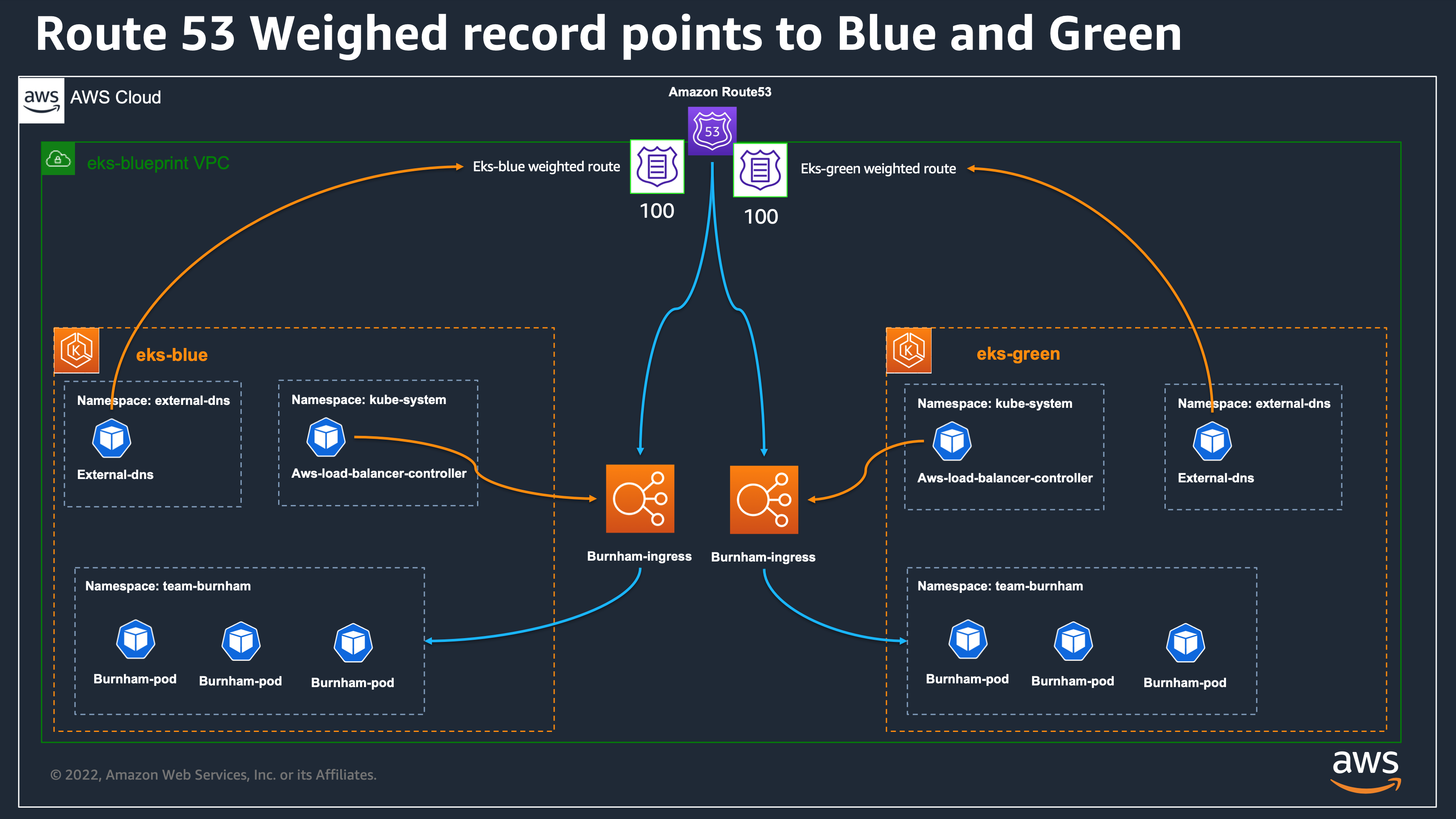

This directory provides a solution based on EKS Blueprints for Terraform that shows how to perform blue/green or canary application workload migration between EKS clusters, using the Amazon Route 53 weighted routing feature. The workloads are dynamically exposed using the AWS Load Balancer Controller and the ExternalDNS add-on.

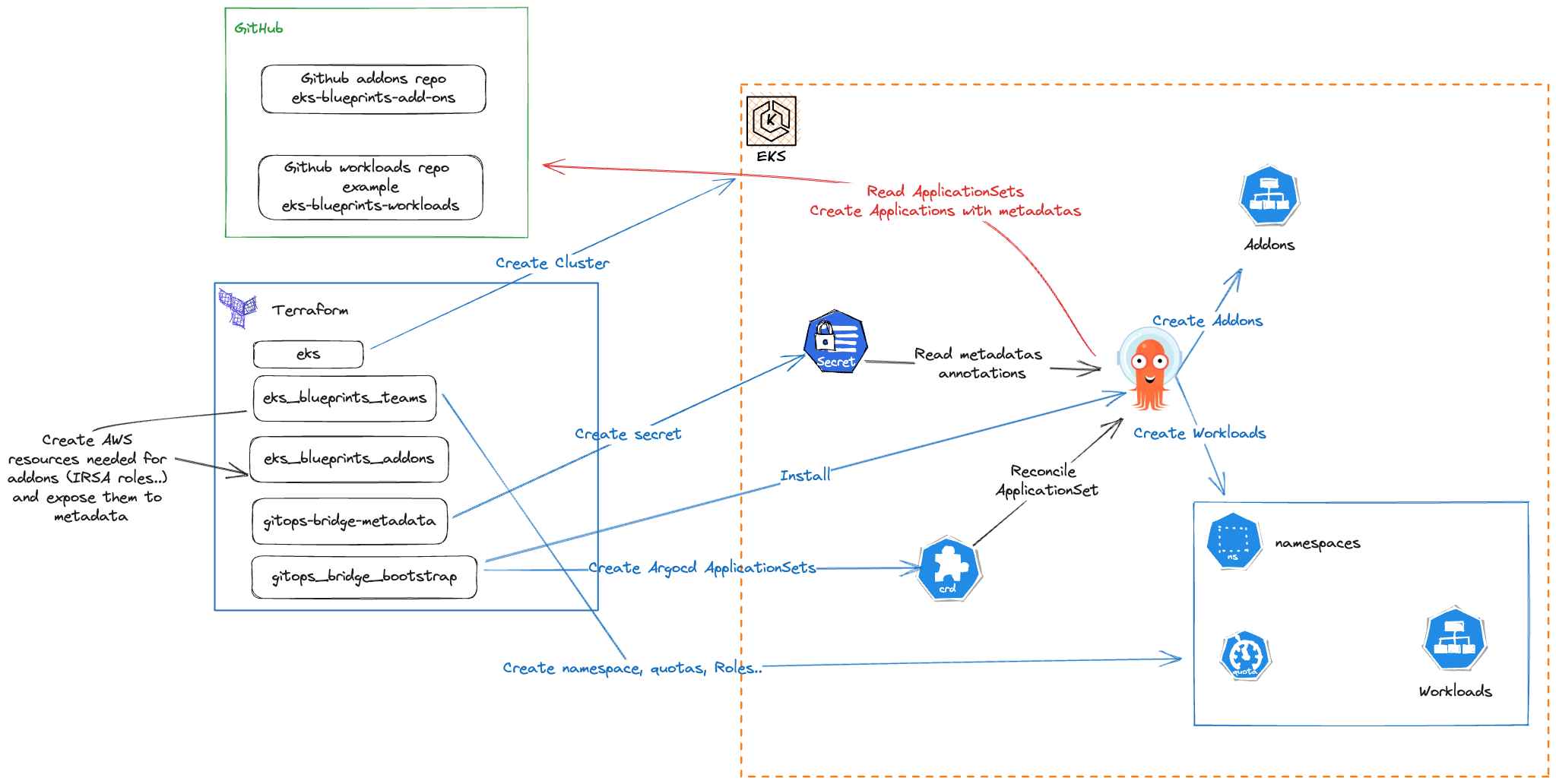

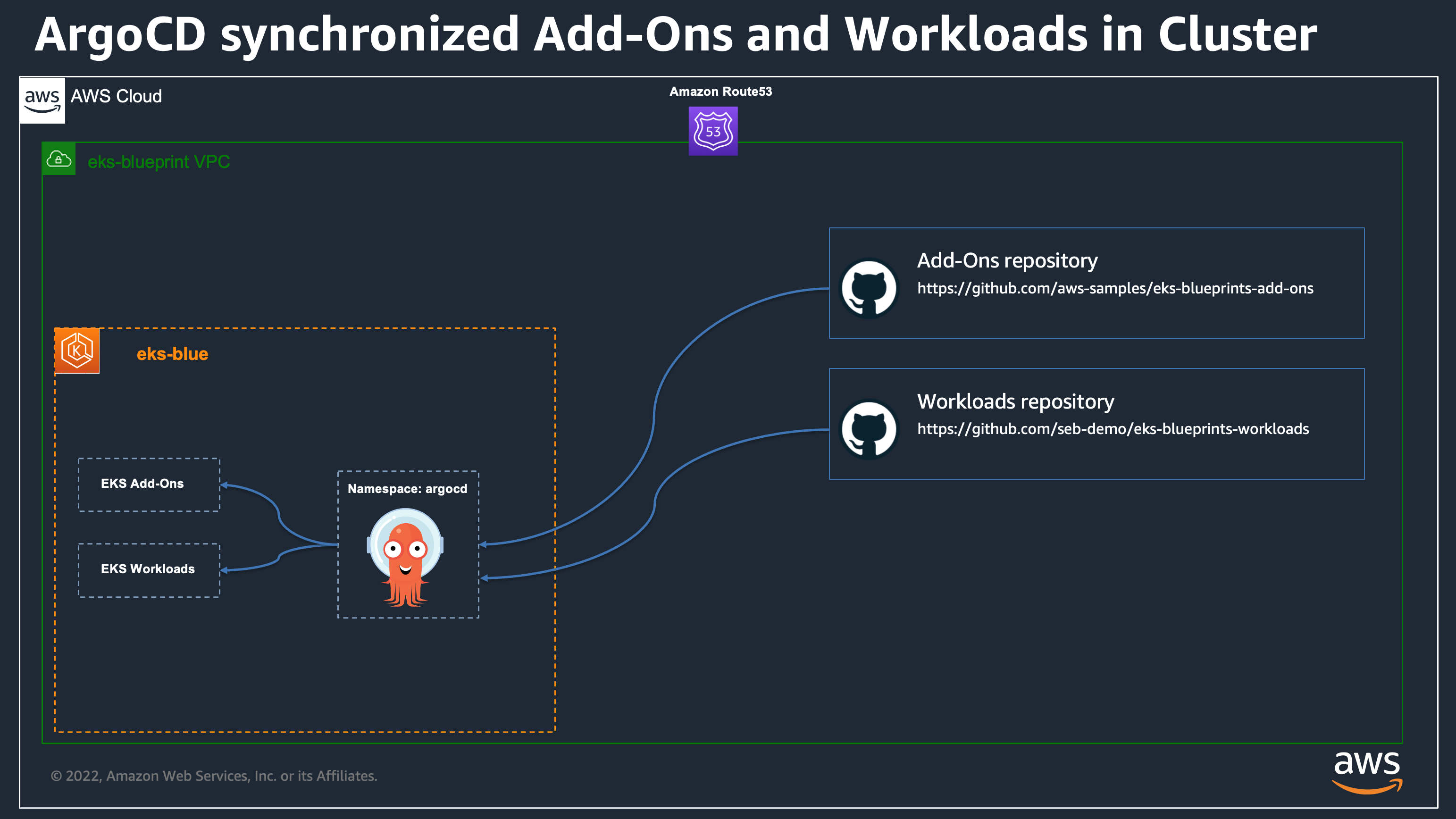

We leverage the existing EKS Blueprints Workloads GitHub repository sample to deploy our GitOps ArgoCD applications, which are defined as Helm charts. We use the ArgoCD App of Apps pattern, in which an ArgoCD Application can also reference other Helm charts to deploy.

You can also find more information in the associated blog post.

Table of contents¶

- Blue/Green Migration

- Table of contents

- Project structure

- Prerequisites

- Quick Start

- How this works

- Automate the migration from Terraform

- Delete the Stack

- Troubleshoot

Project structure¶

See the Architecture of what we are building

Our sample is composed of four main directories:

- environment → this stack creates the common VPC and its dependencies used by our EKS clusters: a Route 53 subdomain hosted zone for our sample, a wildcard certificate in AWS Certificate Manager for our applications' TLS endpoints, and an AWS Secrets Manager password for the ArgoCD UIs.

- modules/eks_cluster → local module defining the EKS Blueprints cluster with the ArgoCD add-on, which automatically deploys additional add-ons and our demo workloads

- eks-blue → an instance of the eks_cluster module to create the blue cluster

- eks-green → an instance of the eks_cluster module to create the green cluster

So we are going to create two EKS clusters sharing the same VPC, and each of them will install our workloads locally from the central GitOps repository using the ArgoCD add-on. In the GitOps workload repository, we have configured our application deployments to use AWS Load Balancer Controller annotations, so that applications are exposed on AWS load balancers created from our Kubernetes manifests. We will have one load balancer per cluster for each of our applications.

We have configured the ExternalDNS add-on in our two clusters to share the same Route 53 hosted zone. The workloads in both clusters also share the same Route 53 DNS records; we rely on Route 53 weighted records to configure canary workload migration between our two EKS clusters.

We leverage the gitops-bridge-argocd-bootstrap Terraform module, which allows us to dynamically provide metadata from Terraform to the ArgoCD instance deployed in the cluster. To do this, the module extracts all metadata from the terraform-aws-eks-blueprints-addons module, which is configured to create all resources except the add-ons' Helm chart installation. The add-on installation is delegated to ArgoCD itself, using the eks-blueprints-add-ons Git repository, which contains ArgoCD ApplicationSets for each supported add-on.

The gitops-bridge creates a secret in the EKS cluster containing all the metadata that ArgoCD ApplicationSets use dynamically at deployment time, so that we can adapt their configuration to our EKS cluster context.

Our objective here is to show how application teams and platform teams can configure their infrastructure and workloads so that application teams can deploy their workloads autonomously to the EKS clusters thanks to ArgoCD, while the platform team keeps control of migrating production workloads from one cluster to another without having to synchronize operations with application teams or ask them to build a complicated CD pipeline.

In this example we show how you can seamlessly migrate your stateless workloads between the two clusters for a blue/green or canary migration, but you can also leverage the same architecture to separate your workloads across different accounts or regions, for example, for either high availability or lower-latency access for your customers.

Prerequisites¶

- Terraform (tested version v1.3.5 on linux)

- Git

- AWS CLI

- AWS test account with administrator role access

- To work with this repository, you will need an existing Amazon Route 53 hosted zone that will be used to create our project hosted zone. It is provided via the Terraform variable hosted_zone_name, defined in terraform.tfvars.example.

- Before moving to the next step, you will need to register a parent domain with AWS Route 53 (https://docs.aws.amazon.com/Route53/latest/DeveloperGuide/domain-register.html) in case you don't have one created yet.

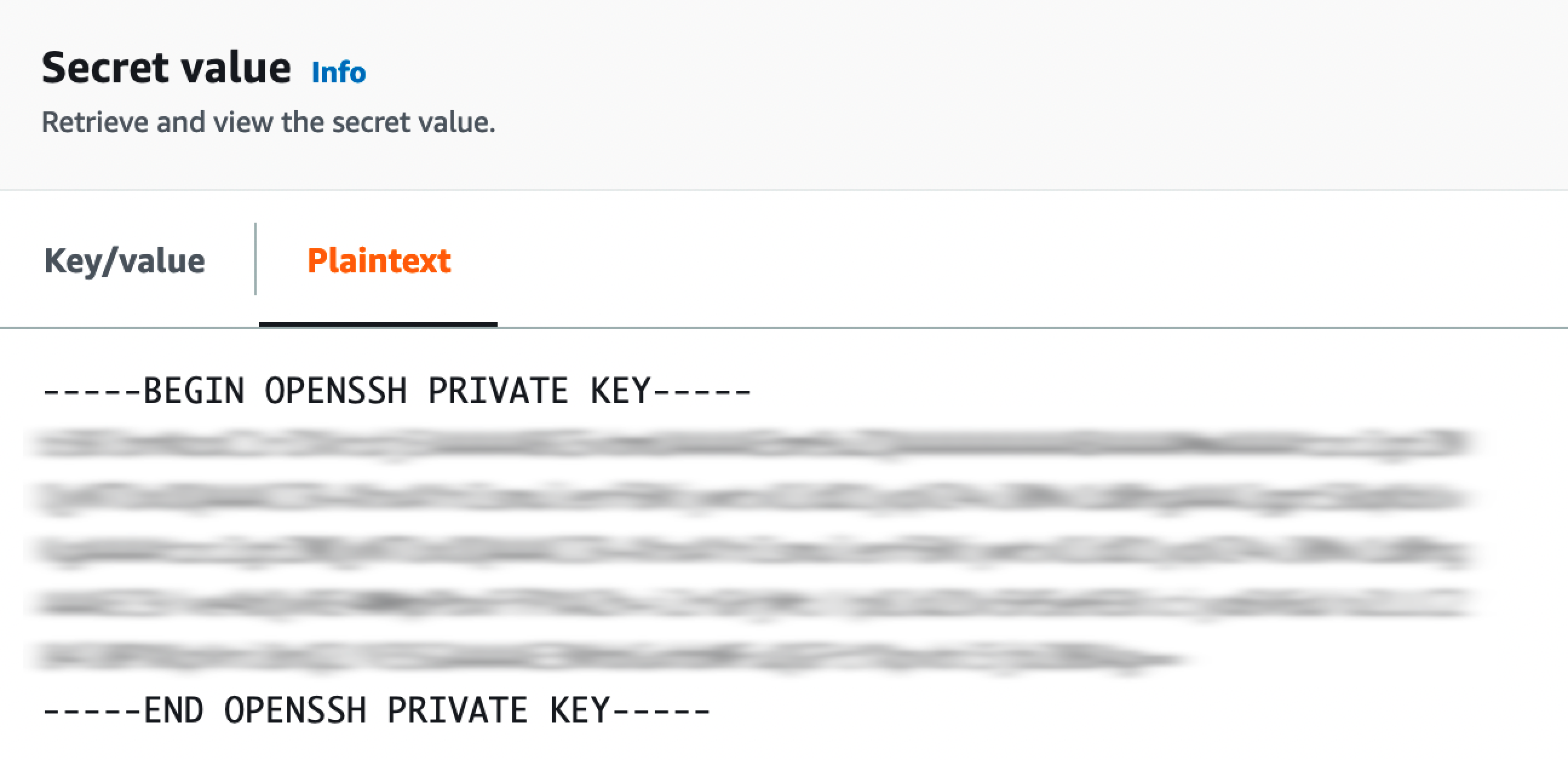

- Access to the GitOps Git repositories uses SSH, which requires an SSH key for authentication. In this example our workload repositories are stored in GitHub; see the GitHub documentation on how to connect with SSH.

- Your GitHub private SSH key value is expected to be stored in plain text in AWS Secrets Manager in a secret named github-blueprint-ssh-key, but you can change it using the Terraform variable workload_repo_secret in terraform.tfvars.example.

Quick Start¶

Configure the Stacks¶

- Clone the repository

git clone https://github.com/aws-ia/terraform-aws-eks-blueprints.git

cd terraform-aws-eks-blueprints/patterns/blue-green-upgrade/

- Copy terraform.tfvars.example to terraform.tfvars, symlink it into each of the environment, eks-blue and eks-green folders, and change region, hosted_zone_name and eks_admin_role_name according to your needs.

cp terraform.tfvars.example terraform.tfvars

ln -s ../terraform.tfvars environment/terraform.tfvars

ln -s ../terraform.tfvars eks-blue/terraform.tfvars

ln -s ../terraform.tfvars eks-green/terraform.tfvars

- You will need to provide the hosted_zone_name, for example my-example.com. Terraform will create a new hosted zone for the project with the name ${environment}.${hosted_zone_name}, so in our example eks-blueprint.my-example.com.

- You need to provide a valid IAM role in eks_admin_role_name to have EKS cluster admin rights, generally the one used in the EKS console.

Create the environment stack¶

More info in the environment Readme

There can be some warnings about undeclared variables. This is normal and you can ignore them: we share the same terraform.tfvars file across the 3 projects by using symlinks, and it declares some variables that are only used by the eks-blue and eks-green directories.

Create the Blue cluster¶

More info in the eks-blue Readme; you can also see the detailed steps in the local module Readme.

This can take 8mn for EKS cluster, 15mn

Create the Green cluster¶

By default, the only differences between the 2 clusters are the values defined in main.tf. We will change those values to upgrade the Kubernetes version of the new cluster, and to migrate our stateless workloads between clusters.

How this works¶

Watch our Workload: we focus on team-burnham namespace.¶

Our clusters are configured with an existing ArgoCD GitHub repository that is synchronized into each of the clusters:

We are going to look at one of the applications deployed from the workload repository as an example to demonstrate our migration automation: the Burnham workload in the team-burnham namespace.

We have set up a simple Go application that returns, in its response body, the name of the cluster it is running on. This makes it easy to see the current state of the migration for our workload.

<head>

<title>Hello EKS Blueprint</title>

</head>

<div class="info">

<h>Hello EKS Blueprint Version 1.4</h>

<p><span>Server address:</span> <span>10.0.2.201:34120</span></p>

<p><span>Server name:</span> <span>burnham-9d686dc7b-dw45m</span></p>

<p class="smaller"><span>Date:</span> <span>2022.10.13 07:27:28</span></p>

<p class="smaller"><span>URI:</span> <span>/</span></p>

<p class="smaller"><span>HOST:</span> <span>burnham.eks-blueprint.mon-domain.com</span></p>

<p class="smaller"><span>CLUSTER_NAME:</span> <span>eks-blueprint-blue</span></p>

</div>

The application is deployed from our

Connect to the cluster: execute one of the EKS cluster login commands from the terraform output command, depending on the IAM role you can assume to access the cluster. If you want EKS admin access to the cluster, you can execute the command associated with the eks_blueprints_admin_team_configure_kubectl output. It should be something similar to:

aws eks --region eu-west-3 update-kubeconfig --name eks-blueprint-blue --role-arn arn:aws:iam::0123456789:role/admin-team-20230505075455219300000002

Note that this allows the role associated with the eks_admin_role_name parameter to assume the role.

You can also connect as the user who created the EKS cluster, without specifying the --role-arn parameter.

Next, you can interact with the cluster and see the deployment

$ kubectl get deployment -n team-burnham -l app=burnham

NAME READY UP-TO-DATE AVAILABLE AGE

burnham 3/3 3 3 3d18h

See the pods

$ kubectl get pods -n team-burnham -l app=burnham

NAME READY STATUS RESTARTS AGE

burnham-7db4c6fdbb-82hxn 1/1 Running 0 3d18h

burnham-7db4c6fdbb-dl59v 1/1 Running 0 3d18h

burnham-7db4c6fdbb-hpq6h 1/1 Running 0 3d18h

See the logs:

$ kubectl logs -n team-burnham -l app=burnham

2022/10/10 12:35:40 {url: / }, cluster: eks-blueprint-blue }

2022/10/10 12:35:49 {url: / }, cluster: eks-blueprint-blue }

You can make a request to the service, and filter the output to know on which cluster it runs:

$ URL=$(echo -n "https://" ; kubectl get ing -n team-burnham burnham-ingress -o json | jq ".spec.rules[0].host" -r)

$ curl -s $URL | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'

eks-blueprint-blue
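To see what the awk filter in the command above does without calling a live endpoint, the sketch below runs the same field split on a single line copied from the sample page. The helper name cluster_name_from_html is an assumption made for illustration; it is not part of the repository.

```shell
#!/usr/bin/env bash
# cluster_name_from_html: hypothetical helper, not part of the repository.
# Reads the HTML page on stdin and prints the cluster name it reports.
cluster_name_from_html() {
  # Splitting each line on <span> and </span> leaves the value in field 4:
  #   $1 = <p class="smaller">, $2 = CLUSTER_NAME:, $3 = " ", $4 = the name
  grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'
}

# One line taken from the sample response shown earlier in this section.
sample='<p class="smaller"><span>CLUSTER_NAME:</span> <span>eks-blueprint-blue</span></p>'

printf '%s\n' "$sample" | cluster_name_from_html   # prints eks-blueprint-blue
```

Against a real cluster, the same helper could be fed with curl -s "$URL" | cluster_name_from_html.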

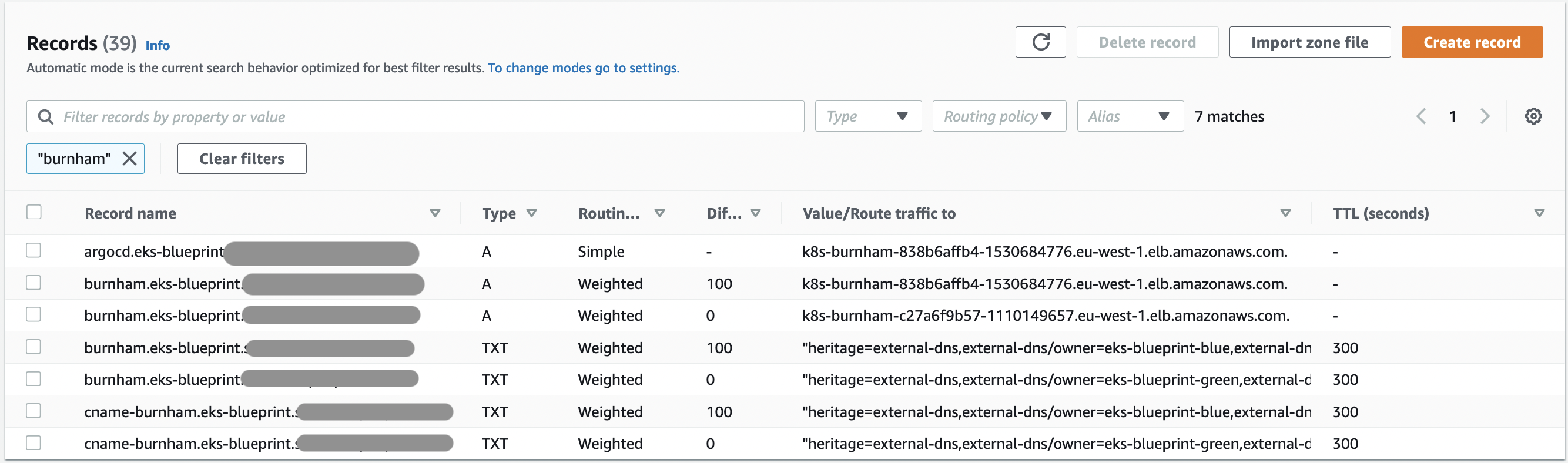

Using AWS Route53 and External DNS¶

We have configured both our clusters to manage the same Amazon Route 53 hosted zone. This is done by having the same configuration of the ExternalDNS add-on in main.tf:

This is the Terraform configuration for the ExternalDNS add-on, which is deployed by the Blueprint using ArgoCD. We specify the Route 53 zone that external-dns needs to monitor.

We also configure the addons_metadata to provide more configuration to external-dns:

- We use ExternalDNS in sync mode so that the controller can create but also remove DNS records according to service or ingress object creation.

- We also configured the txtOwnerId with the name of the cluster, so that each controller can create/update/delete records, but only records that are associated with the proper OwnerId.

- Each Route 53 record is also associated with a txt record. This record is used to specify the owner of the associated record and is in the form of:

"heritage=external-dns,external-dns/owner=eks-blueprint-blue,external-dns/resource=ingress/team-burnham/burnham-ingress"

So in this example the owner of the record is the external-dns controller from the eks-blueprint-blue EKS cluster, and the record corresponds to the Kubernetes ingress resource named burnham-ingress in the team-burnham namespace.
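As a small illustration of how the ownership record can be read, the sketch below pulls the owner out of a TXT value shaped like the one above. The function name txt_owner is hypothetical, and the parsing is only an approximation for inspection purposes; it is not how ExternalDNS itself reads the record.

```shell
#!/usr/bin/env bash
# txt_owner: hypothetical helper for inspecting an ExternalDNS ownership record.
# Takes the TXT value as its first argument and prints the owner id.
txt_owner() {
  # The value is a comma-separated list of key=value pairs, so put each
  # pair on its own line and keep only the external-dns/owner value.
  printf '%s\n' "$1" | tr ',' '\n' | sed -n 's|^external-dns/owner=||p'
}

# TXT value from the example above, without the surrounding quotes.
record='heritage=external-dns,external-dns/owner=eks-blueprint-blue,external-dns/resource=ingress/team-burnham/burnham-ingress'

txt_owner "$record"   # prints eks-blueprint-blue
```

Comparing this value with the txtOwnerId of a cluster tells you which controller is allowed to change the record.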

Using this feature, and relying on weighted records, we will be able to do blue/green or canary migration by changing the weight of ingress resources defined in each cluster.

Configure Ingress resources with weighted records¶

Since we have configured the ExternalDNS add-on, we can now define specific annotations in our ingress object. You may already know that our workloads are synchronized using ArgoCD from our workload repository sample.

We are focusing on the burnham deployment, which is defined here where we configure the burnham-ingress ingress object with:

external-dns.alpha.kubernetes.io/set-identifier: {{ .Values.spec.clusterName }}

external-dns.alpha.kubernetes.io/aws-weight: '{{ .Values.spec.ingress.route53_weight }}'

We rely on two external-dns annotations to configure how the record is created. The set-identifier annotation contains the name of the cluster for which we want to create the record, which must match the one defined in the external-dns txtOwnerId configuration.

The aws-weight annotation configures the value of the weighted record. It is deployed from Helm values that are injected by Terraform in our example, so that our platform team can control autonomously how and when they want to migrate workloads between the EKS clusters.

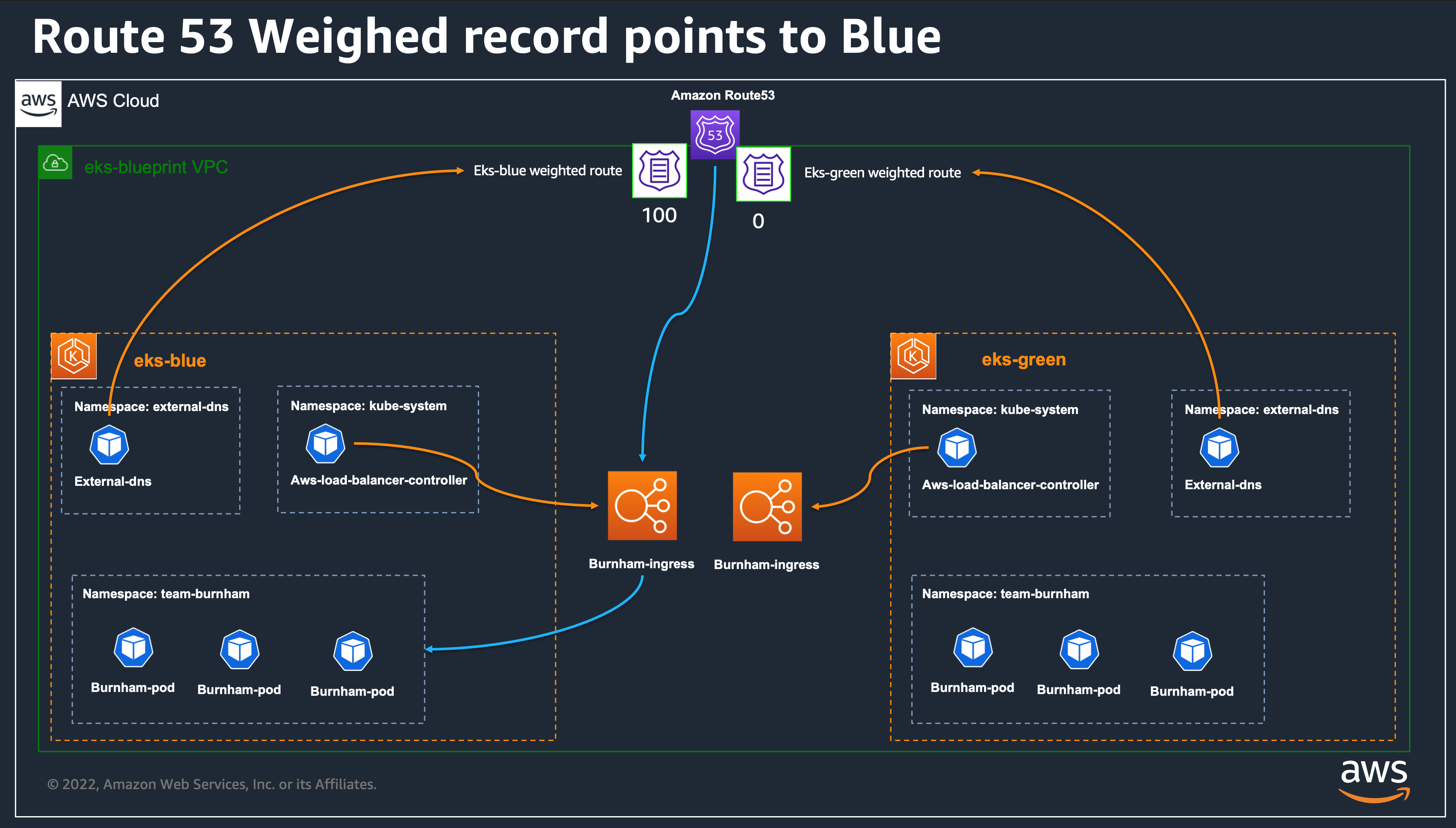

Amazon Route 53 weighted records work like this:

- If we specify a value of 100 in the eks-blue cluster and 0 in the eks-green cluster, then Route 53 will route all requests to the eks-blue cluster.

- If we specify a value of 0 in the eks-blue cluster and 100 in the eks-green cluster, then Route 53 will route all requests to the eks-green cluster.

- We can also define any intermediate values, like 100 in the eks-blue cluster and 100 in the eks-green cluster, so that we have 50% on eks-blue and 50% on eks-green.
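The weights are relative: Route 53 answers with a given record in proportion to its weight divided by the sum of the weights of all records sharing the same name and type. The sketch below works through that arithmetic for two clusters. The function name traffic_share is an assumption made for illustration, and the result is the expected share of DNS answers, not a measurement of real traffic.

```shell
#!/usr/bin/env bash
# traffic_share BLUE_WEIGHT GREEN_WEIGHT
# Hypothetical helper: prints the expected share of DNS answers per cluster.
traffic_share() {
  local blue="$1" green="$2"
  local total=$(( blue + green ))
  if [ "$total" -eq 0 ]; then
    # When every weight is 0, Route 53 returns all records with equal probability.
    echo "blue=50% green=50%"
    return 0
  fi
  # Integer percentages: share = weight / sum of weights.
  echo "blue=$(( 100 * blue / total ))% green=$(( 100 * green / total ))%"
}

traffic_share 100 0     # blue=100% green=0%
traffic_share 100 100   # blue=50% green=50%
traffic_share 0 100     # blue=0% green=100%
```

Because only the ratio matters, weights of 100 and 100 behave the same as 1 and 1, and a canary step such as 90 and 10 sends roughly one resolution in ten to the new cluster.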

Automate the migration from Terraform¶

Now that we have set up our 2 clusters, deployed with ArgoCD, and that the weighted records from values.yaml are injected from Terraform, let's see how our platform team can trigger the workload migration.

- At first, 100% of burnham traffic is sent to the eks-blue cluster. This is controlled from the eks-blue/main.tf & eks-green/main.tf files with the parameter route53_weight = "100". The same parameter is set to 0 in the eks-green cluster.

Which corresponds to:

All requests to our endpoint should respond with eks-blueprint-blue. We can test it with the following command:

URL=$(echo -n "https://" ; kubectl get ing -n team-burnham burnham-ingress -o json | jq ".spec.rules[0].host" -r)

curl -s $URL | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'

you should see:

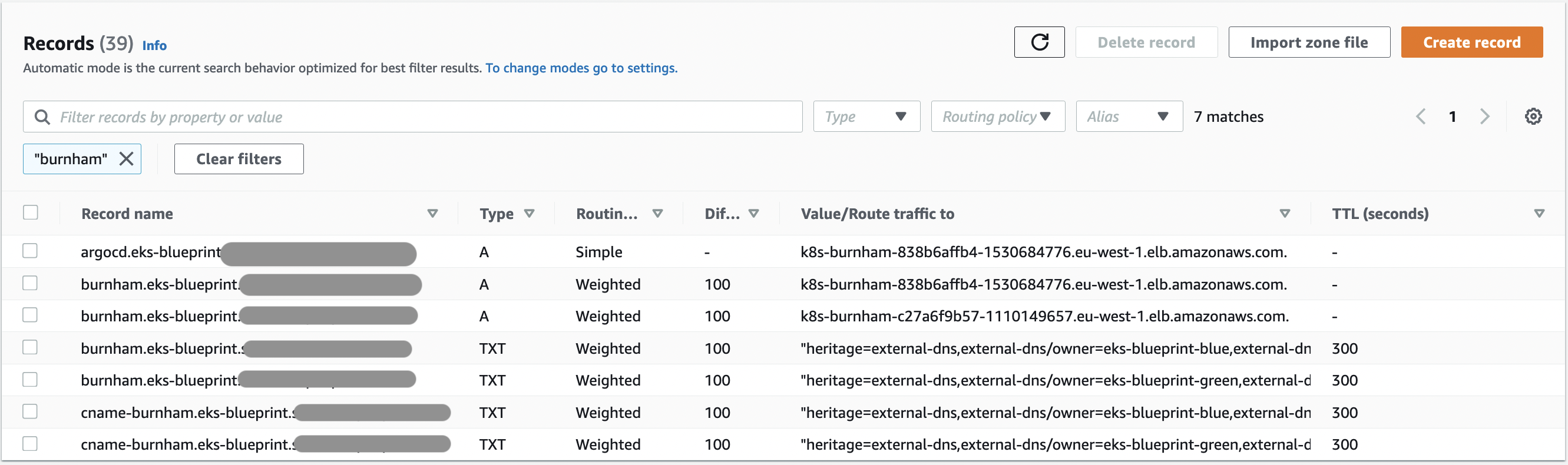

- Let's change traffic to 50% eks-blue and 50% eks-green by also setting the value 100 in the eks-green locals.tf (route53_weight = "100"), and let's run terraform apply to let Terraform update the configuration.

Which corresponds to:

All records have a weight of 100, so we will have 50% of requests on each cluster.

We can check the ratio of request resolution between both clusters (the repeat builtin used below is available in zsh):

URL=$(echo -n "https://" ; kubectl get ing -n team-burnham burnham-ingress -o json | jq ".spec.rules[0].host" -r)

repeat 10 curl -s $URL | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}' && sleep 60

Result should be similar to:

eks-blueprint-blue

eks-blueprint-blue

eks-blueprint-blue

eks-blueprint-blue

eks-blueprint-green

eks-blueprint-green

eks-blueprint-blue

eks-blueprint-green

eks-blueprint-blue

eks-blueprint-green

The default TTL is 60 seconds, and each resolution has a 50% chance of returning the blue or the green cluster, so you may need to replay the previous command several times to get an idea of the distribution, which theoretically is 50%.
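Counting the answers by hand gets tedious over several runs, so here is a minimal sketch that tallies them. Both function names are assumptions made for illustration: sample_clusters is a bash loop written as an equivalent of the zsh repeat command above, and tally_clusters counts how many times each cluster name appears. The demo feeds tally_clusters with the ten sample results listed above, so it runs without a cluster.

```shell
#!/usr/bin/env bash
# sample_clusters URL [COUNT]: hypothetical bash equivalent of the zsh
# "repeat" loop above. Defined here for reference only, not called below.
sample_clusters() {
  local url="$1" count="${2:-10}" i
  for (( i = 0; i < count; i++ )); do
    curl -s "$url" | grep CLUSTER_NAME | awk -F "<span>|</span>" '{print $4}'
    sleep 60   # wait for the 60 second TTL before resolving again
  done
}

# tally_clusters: reads one cluster name per line, prints "name count".
tally_clusters() {
  sort | uniq -c | awk '{ printf "%s %d\n", $2, $1 }'
}

# The ten sample results from the run shown above.
printf '%s\n' \
  eks-blueprint-blue eks-blueprint-blue eks-blueprint-blue eks-blueprint-blue \
  eks-blueprint-green eks-blueprint-green eks-blueprint-blue \
  eks-blueprint-green eks-blueprint-blue eks-blueprint-green | tally_clusters
# eks-blueprint-blue 6
# eks-blueprint-green 4
```

With a live endpoint, sample_clusters "$URL" 10 | tally_clusters would produce the same kind of summary; over a small number of samples a 6/4 split is consistent with a 50% theoretical ratio.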

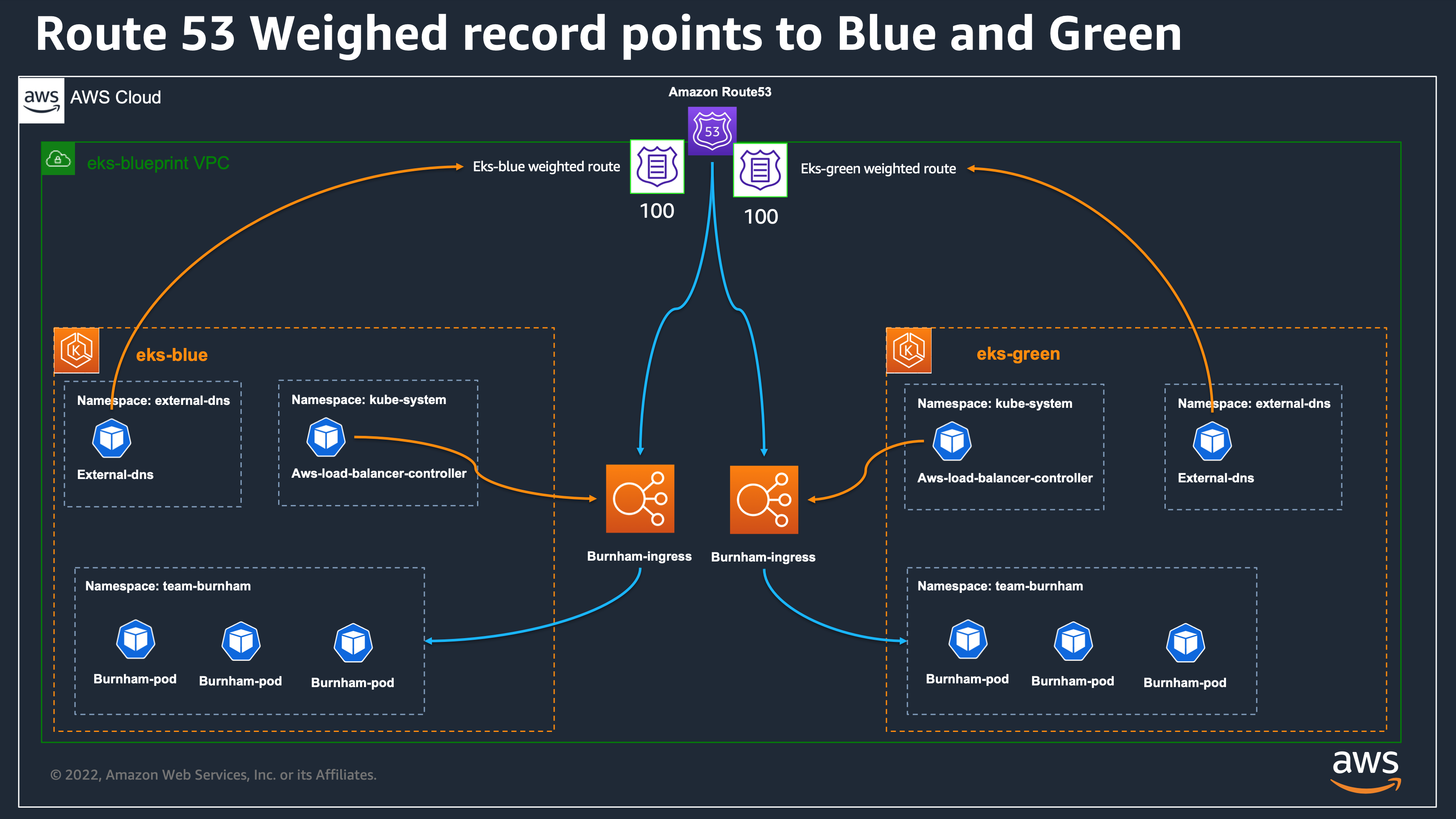

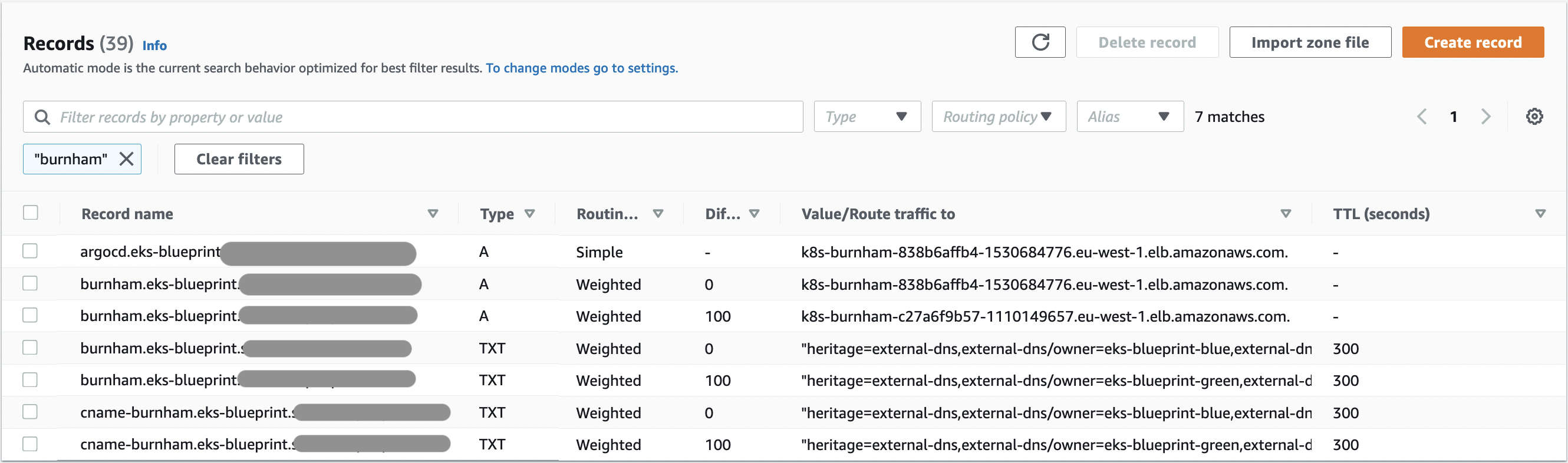

- Now that we see that our green cluster is taking requests correctly, we can update the eks-blue cluster configuration to set the weight to 0 and apply again. After a few moments, your Route 53 records should look like the screenshot below, and all requests should now reach the eks-green cluster.

Which corresponds to:

At this step, once all cached DNS records have expired, all the traffic will go to the eks-green cluster. You can either delete the eks-blue cluster, or decide to upgrade the blue cluster and send traffic back to eks-blue afterward, or simply keep it as a possibility for rollback if needed.

In this sample, we use a single Terraform variable to control the weight for all applications. We could also choose to have several parameters, say one per application, so that you can control your migration strategy more finely, application by application.

Delete the Stack¶

Delete the EKS Cluster(s)¶

This section can be executed in either the eks-blue or eks-green folder, or in both if you want to delete both clusters.

In order to properly destroy the cluster, we first need to remove the ArgoCD workloads while keeping the ArgoCD add-ons. We also need to remove our Karpenter provisioners, and any other objects created outside of Terraform that need to be cleaned up before destroying the Terraform stack.

Why do this? When we remove an ingress object, we want the associated Kubernetes add-ons, like the AWS Load Balancer Controller and ExternalDNS, to correctly free the associated AWS resources. If we directly ask Terraform to destroy everything, it may remove these controllers first, without giving them time to remove the associated AWS resources, which would then still exist in AWS and prevent us from completely deleting our cluster.

TL;DR¶

Troubleshoot¶

External DNS Ownership¶

The Amazon Route 53 record associations are controlled by the ExternalDNS controller. You can see the logs from the controller to understand what is happening by executing the following command in each cluster:

In the eks-blue cluster, you can see logs like the following, which show that the eks-blueprint-blue controller won't make any changes to records owned by the eks-blueprint-green cluster; the reverse is also true.

time="2022-10-10T15:46:54Z" level=debug msg="Skipping endpoint skiapp.eks-blueprint.sallaman.people.aws.dev 300 IN CNAME eks-blueprint-green k8s-riker-68438cd99f-893407990.eu-west-1.elb.amazonaws.com [{aws/evaluate-target-health true} {alias true} {aws/weight 100}] because owner id does not match, found: \"eks-blueprint-green\", required: \"eks-blueprint-blue\""

time="2022-10-10T15:46:54Z" level=debug msg="Refreshing zones list cache"

Check Route 53 Record status¶

We can also use the CLI to see our current Route 53 configuration:

export ROOT_DOMAIN=<your-domain-name> # the value you put in hosted_zone_name

ZONE_ID=$(aws route53 list-hosted-zones-by-name --dns-name "eks-blueprint.${ROOT_DOMAIN}." --query "HostedZones[0].Id" --output text)

echo $ZONE_ID

aws route53 list-resource-record-sets \

--output json \

--hosted-zone-id $ZONE_ID \

--query "ResourceRecordSets[?Name == 'burnham.eks-blueprint.$ROOT_DOMAIN.']|[?Type == 'A']"

aws route53 list-resource-record-sets \

--output json \

--hosted-zone-id $ZONE_ID \

--query "ResourceRecordSets[?Name == 'burnham.eks-blueprint.$ROOT_DOMAIN.']|[?Type == 'TXT']"

Check current resolution and TTL value¶

As DNS migration depends on DNS caching, which normally relies on the TTL, you can use dig to see the current value of the TTL used locally:

export ROOT_DOMAIN=<your-domain-name> # the value you put for hosted_zone_name

dig +noauthority +noquestion +noadditional +nostats +ttlunits +ttlid A burnham.eks-blueprint.$ROOT_DOMAIN

Get ArgoCD UI Password¶

You can connect to the ArgoCD UI using the service:

kubectl get svc -n argocd argo-cd-argocd-server -o json | jq '.status.loadBalancer.ingress[0].hostname' -r

Then log in as admin and get the password from AWS Secrets Manager: